Debounce, what?

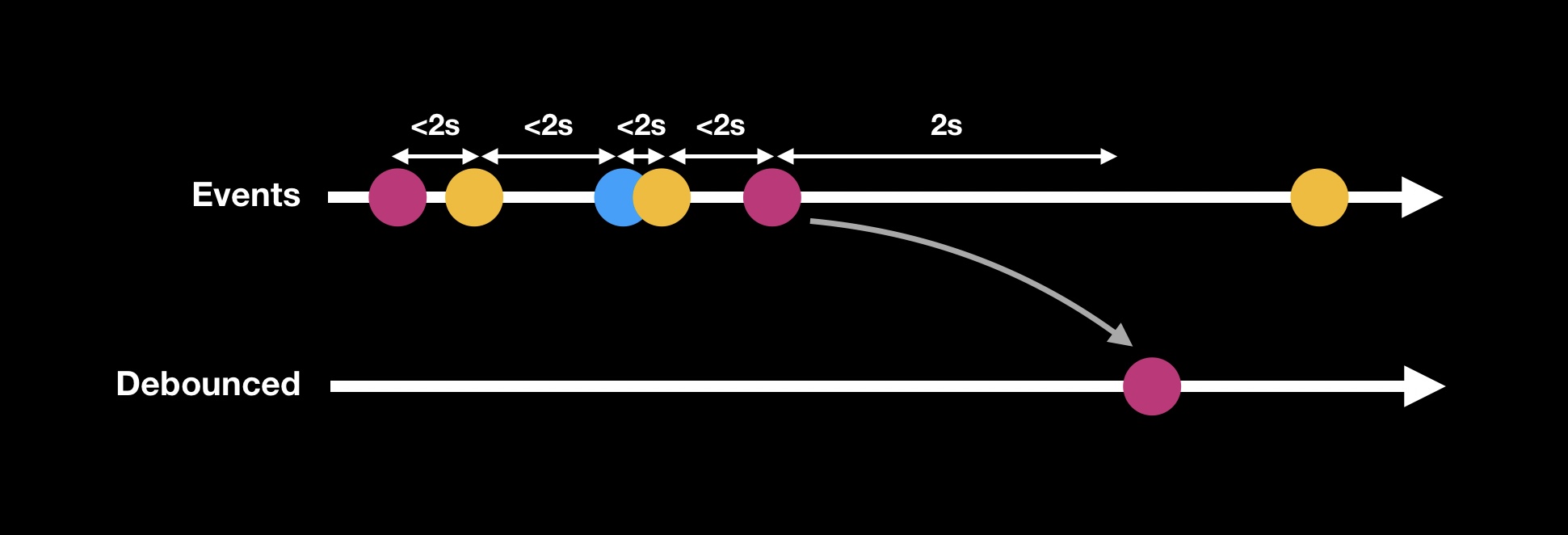

Before diving into the implementation we first need to understand what is a Debounce operation. Let’s use a marble diagram to visualize the behaviour:

The Debounce operator delays the emission of elements from a sequence of events until a certain amount of time has passed without the sequence producing another event. For example, if a search field is debounced for 2 seconds, a request will only be sent to the server if the user has not entered any additional input for at least 2 seconds. This helps to prevent intermediate events from triggering unnecessary requests.
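To make the rule concrete, here is a minimal sketch that applies it to a list of timestamped events, without any concurrency involved. The `TimedEvent` type and the `debounced(_:duration:)` function are hypothetical names introduced for this illustration only. The sketch assumes the events are sorted by time and that a gap exactly equal to the duration lets the event through.

```swift
/// A timestamped event, as on a marble diagram (time in seconds).
/// Hypothetical type, used for illustration only.
struct TimedEvent: Equatable {
    let time: Double
    let value: String
}

/// Returns the events a debounce operator would let through,
/// stamped with the time at which they would be emitted.
/// Assumes `events` is sorted by ascending time.
func debounced(_ events: [TimedEvent], duration: Double) -> [TimedEvent] {
    var output: [TimedEvent] = []
    for (index, event) in events.enumerated() {
        let isLast = index == events.count - 1
        // The quiet period is the gap until the next event (infinite after the last one).
        let quietPeriod = isLast ? Double.infinity : events[index + 1].time - event.time
        if quietPeriod >= duration {
            // Nothing else arrived in time: the event is emitted once the duration has elapsed.
            output.append(TimedEvent(time: event.time + duration, value: event.value))
        }
    }
    return output
}

let typed = [
    TimedEvent(time: 0, value: "s"),
    TimedEvent(time: 0.5, value: "sw"),
    TimedEvent(time: 1, value: "swi"),
    TimedEvent(time: 4, value: "swif"),
]

for event in debounced(typed, duration: 2) {
    print("t = \(event.time)s: \(event.value)")
}
// prints "t = 3.0s: swi"
// prints "t = 6.0s: swif"
```

The first two values are dropped because another keystroke arrives less than 2 seconds after each of them. Only the values followed by a long enough silence survive.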

A trivial implementation

To implement such a mechanism we need a way to wait for an amount of time in a non-blocking way. We don’t want to freeze the user input while we are measuring the elapsed time. We can use a Task to run the wait asynchronously and Task.sleep() to wait for the expected time.

In our implementation we will debounce the execution of a closure that takes a parameter.

    final class Debounce<T> {
        private let block: @Sendable (T) async -> Void
        private let duration: ContinuousClock.Duration

        init(
            duration: ContinuousClock.Duration,
            block: @Sendable @escaping (T) async -> Void
        ) {
            self.duration = duration
            self.block = block
        }

        func emit(value: T) {
            Task { [duration, block] in
                try? await Task.sleep(for: duration)
                await block(value)
            }
        }
    }

    let debounce = Debounce<String>(duration: .seconds(2)) { event in
        print(event)
    }

    debounce.emit(value: "1")
    sleep(1)
    debounce.emit(value: "12")

Let’s break it down:

- This class is generic over `T` to allow debouncing any data type.
- The `block` is marked as `Sendable`. As it is executed within a `Task`, it may be executed concurrently and must be thread-safe to prevent race conditions.
- The block is also marked as `async`. Although this is not required, we can afford it since we are already in the context of a `Task`.
- The `emit(value:)` function receives a new value to debounce and creates a new `Task` that waits for the specified duration before executing the block with that value.

Will it debounce? Well … no

It will execute the block for every given value, no matter how frequently the emit(value:) function is called. All it does is delay each execution by the duration:

- T0: the program is launched

- T0 + 2s: the program prints “1”

- T0 + 3s: the program prints “12”

We need a way to cancel the previous task every time the emit(value:) function is called. To do that, we can keep a reference to the task and call task.cancel(). If the previous task is still in progress, this marks it as cancelled. If the task is sleeping, the Task.sleep() operation throws a CancellationError thanks to cooperative cancellation, which prevents the block from being executed.
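Before applying this to the class, here is a small sketch of the cancellation behaviour in isolation. The `runSleepingTask(duration:cancelAfter:)` helper is a hypothetical name introduced for this illustration. It assumes a platform where `Task.sleep(for:)` is available (macOS 13 / iOS 16 or later) and a context that allows top-level `await`, such as a `main.swift` file.

```swift
/// Starts a task that sleeps for `duration`, optionally cancels it after `cancelAfter`,
/// and reports whether the code placed after the sleep was reached.
/// Hypothetical helper, used for illustration only.
func runSleepingTask(duration: Duration, cancelAfter: Duration?) async -> Bool {
    let task = Task<Bool, Never> {
        do {
            try await Task.sleep(for: duration)
            return true   // the sleep completed: the debounced block would run here
        } catch {
            return false  // the sleep threw a CancellationError: the block is skipped
        }
    }
    if let cancelAfter {
        // Wait a little, then cancel the task while it is still sleeping.
        try? await Task.sleep(for: cancelAfter)
        task.cancel()
    }
    return await task.value
}

let cancelled = await runSleepingTask(duration: .milliseconds(300), cancelAfter: .milliseconds(50))
let completed = await runSleepingTask(duration: .milliseconds(50), cancelAfter: nil)
print(cancelled, completed) // prints "false true"
```

The Debounce class applies the same idea by keeping a reference to the task created for the previous value: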

    final class Debounce<T> {
        private let block: @Sendable (T) async -> Void
        private let duration: ContinuousClock.Duration
        private var task: Task<Void, Never>?

        init(
            duration: ContinuousClock.Duration,
            block: @Sendable @escaping (T) async -> Void
        ) {
            self.duration = duration
            self.block = block
        }

        func emit(value: T) {
            self.task?.cancel()
            self.task = Task { [duration, block] in
                do {
                    try await Task.sleep(for: duration)
                    await block(value)
                } catch {}
            }
        }
    }

    let debounce = Debounce<String>(duration: .seconds(2)) { event in
        print(event)
    }

    debounce.emit(value: "1")
    sleep(1)
    debounce.emit(value: "12")
    sleep(1)
    debounce.emit(value: "123")
    sleep(3)
    debounce.emit(value: "1234")

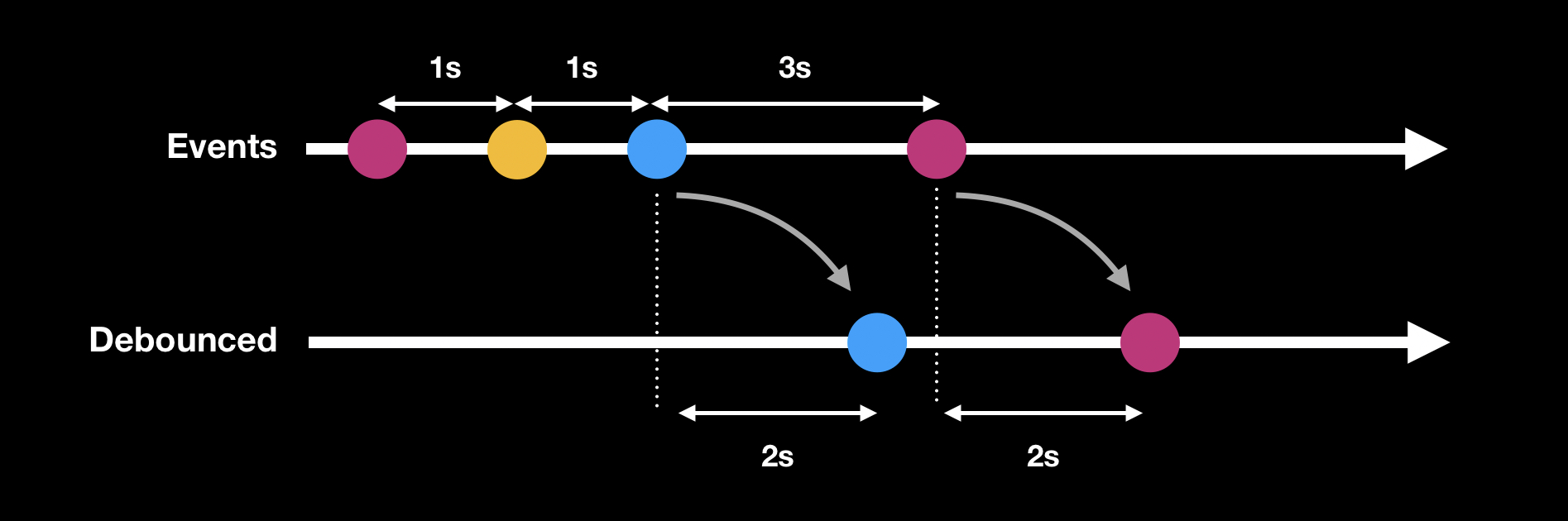

- When the

emit(_:)function is called for the first time, a task is created and it sleeps for 2 seconds. - 1 second after the first task is created,

emit(_:)is called again. The previous task is cancelled, causingTask.sleep(_:)to throw an exception. The first block will not be executed. A new task is created and it sleeps for 2 seconds. - 1 second after the second task is created,

emit(_:)is called again. The previous task is cancelled, causingTask.sleep(_:)to throw an exception. The second block will not be executed. A new task is created and it sleeps for 2 seconds. - 2 seconds after the third task is created, the sleep is finished and the block is executed.

- 1 second after that,

emit(_:)is called for the fourth time. The previous task has already finished (the cancellation will do nothing) and a new task is created. This task sleeps for 2 seconds and then executes the block.

In the end the timeline will be:

We have debounced the stream of events by discarding the first 2 ones.

Although this solution works, it may not be the most resource-efficient. When processing a large number of events, creating numerous tasks can potentially strain system resources. To optimize resource usage, we may want to consider alternative approaches.

Optimized implementation

We need a Task whose lifespan should be extended every time an event occurs before the duration has elapsed. Rather than having a separate Task for each event, we should have a single, longer-lasting Task for bursts of events.

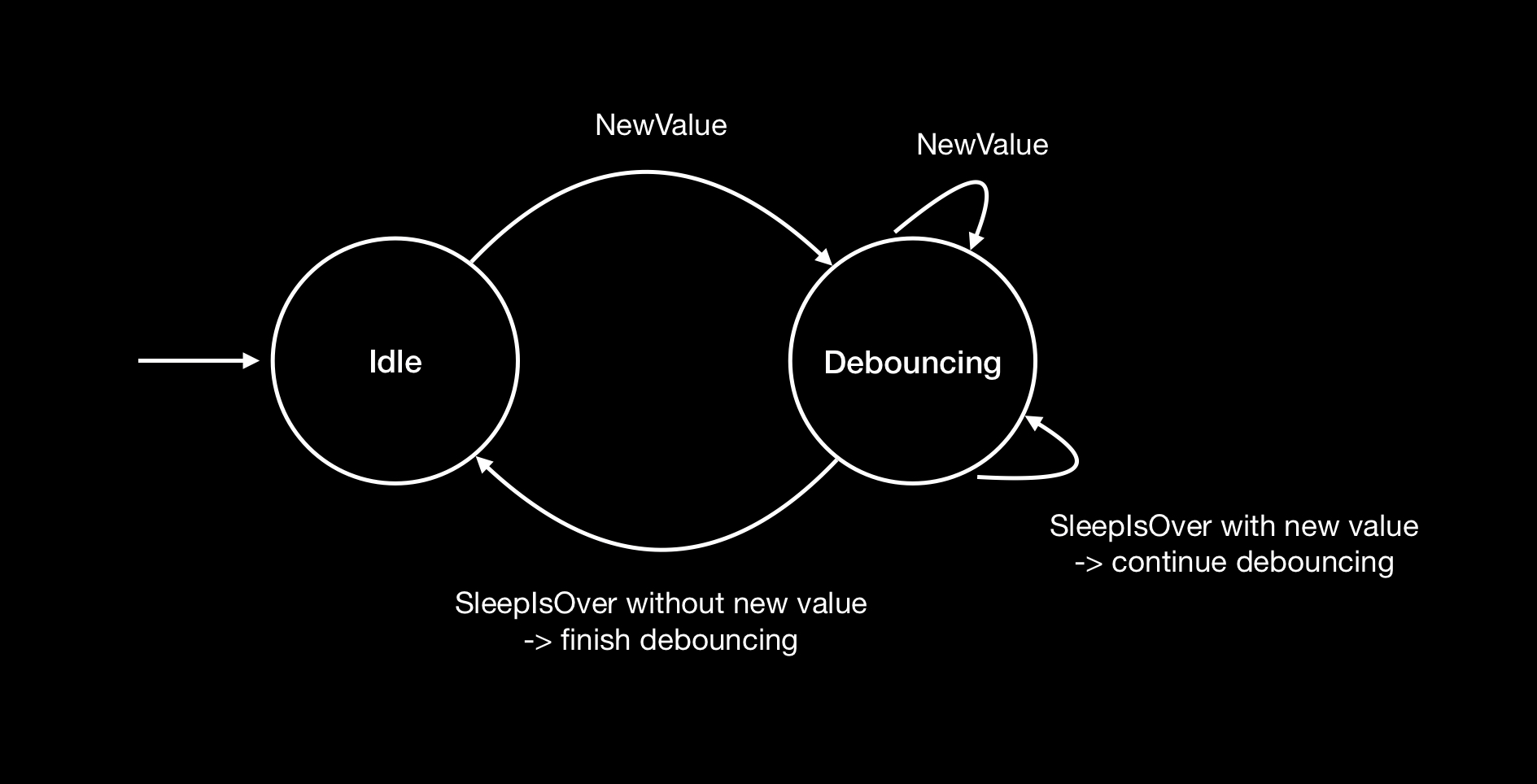

As this can be a bit tricky to implement, we can use a state machine to help identify what happens at each step.

Basically this state machine will track the latest received value and decide whether or not we should end the debouncing.

    struct StateMachine<T> {
        enum State {
            case idle
            case debouncing(value: T, dueTime: ContinuousClock.Instant, isValueDuringSleep: Bool)
        }

        var state: State
        let duration: ContinuousClock.Duration

        init(duration: ContinuousClock.Duration) {
            self.state = .idle
            self.duration = duration
        }

        mutating func newValue(_ value: T) -> (Bool, ContinuousClock.Instant) {
            let dueTime = ContinuousClock.now + duration
            switch self.state {
            case .idle:
                // there is no value being debounced
                self.state = .debouncing(value: value, dueTime: dueTime, isValueDuringSleep: false)
                // we should start a new task to begin the debounce
                return (true, dueTime)
            case .debouncing:
                // there is already a value being debounced
                // the new value takes its place and we update the due time
                self.state = .debouncing(value: value, dueTime: dueTime, isValueDuringSleep: true)
                // no need to create a new task, we extend the lifespan of the current task
                return (false, dueTime)
            }
        }

        enum SleepIsOverAction {
            case continueDebouncing(dueTime: ContinuousClock.Instant)
            case finishDebouncing(value: T)
        }

        mutating func sleepIsOver() -> SleepIsOverAction {
            switch self.state {
            case .idle:
                fatalError("inconsistent state, no value was being debounced.")
            case .debouncing(let value, let dueTime, true):
                // one or more values have been set while sleeping
                state = .debouncing(value: value, dueTime: dueTime, isValueDuringSleep: false)
                // we have to continue debouncing with the latest value
                return .continueDebouncing(dueTime: dueTime)
            case .debouncing(let value, _, false):
                // no values were set while sleeping
                state = .idle
                // we can output the latest known value
                return .finishDebouncing(value: value)
            }
        }
    }

We can then use that state machine in the Debounce class:

    import Foundation

    final class SafeStorage<T>: @unchecked Sendable {
        private let lock = NSRecursiveLock()
        private var stored: T

        init(stored: T) {
            self.stored = stored
        }

        func get() -> T {
            self.lock.lock()
            defer { self.lock.unlock() }
            return self.stored
        }

        func set(stored: T) {
            self.lock.lock()
            defer { self.lock.unlock() }
            self.stored = stored
        }

        func apply<R>(block: (inout T) -> R) -> R {
            self.lock.lock()
            defer { self.lock.unlock() }
            return block(&self.stored)
        }
    }

    public final class Debounce<T>: Sendable {
        private let output: @Sendable (T) async -> Void
        private let stateMachine: SafeStorage<StateMachine<T>>
        private let task: SafeStorage<Task<Void, Never>?>

        public init(
            duration: ContinuousClock.Duration,
            output: @Sendable @escaping (T) async -> Void
        ) {
            self.stateMachine = SafeStorage(stored: StateMachine(duration: duration))
            self.task = SafeStorage(stored: nil)
            self.output = output
        }

        public func emit(value: T) {
            let (shouldStartATask, dueTime) = self.stateMachine.apply { machine in
                machine.newValue(value)
            }

            if shouldStartATask {
                self.task.set(stored: Task { [output, stateMachine] in
                    var localDueTime = dueTime
                    loop: while true {
                        // `try?` ignores the CancellationError: a cancelled task skips the
                        // remaining wait and delivers the latest value right away
                        try? await Task.sleep(until: localDueTime, clock: .continuous)

                        let action = stateMachine.apply { machine in
                            machine.sleepIsOver()
                        }

                        switch action {
                        case .finishDebouncing(let value):
                            await output(value)
                            break loop
                        case .continueDebouncing(let newDueTime):
                            localDueTime = newDueTime
                            continue loop
                        }
                    }
                })
            }
        }

        deinit {
            self.task.get()?.cancel()
        }
    }

Let’s break it down a bit:

We have introduced the SafeStorage class, which is a thread-safe wrapper around a value. This class is backed by a lock and is used for two purposes:

- To access the state machine within the context of a `Task`, which may involve concurrent accesses.
- To ensure that the `Debounce` class is `Sendable` by making the `Task` access thread-safe. By doing so we allow the debounce operator to be used concurrently if needed.
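As a rough illustration of what the lock buys us, the sketch below hammers a lock-protected value from several threads at once. `LockedBox` is a hypothetical, reduced stand-in for `SafeStorage` (same `apply` idea, shorter API), and `DispatchQueue.concurrentPerform` is only used here to generate concurrent accesses.

```swift
import Foundation

/// Hypothetical minimal lock-protected box, following the same idea as `SafeStorage`.
final class LockedBox<T>: @unchecked Sendable {
    private let lock = NSRecursiveLock()
    private var stored: T

    init(_ stored: T) {
        self.stored = stored
    }

    /// Runs `block` with exclusive access to the stored value.
    func apply<R>(_ block: (inout T) -> R) -> R {
        lock.lock()
        defer { lock.unlock() }
        return block(&stored)
    }
}

let counter = LockedBox(0)

// 1,000 increments spread over several threads.
DispatchQueue.concurrentPerform(iterations: 1_000) { _ in
    counter.apply { value in value += 1 }
}

let total = counter.apply { value in value }
print(total) // prints "1000"
```

Each read-modify-write happens while the lock is held, so no increment is lost. The state machine transitions in `Debounce` rely on the same guarantee.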

Then we simply forward the calls to the state machine and apply the resulting actions.

By using this class, we can debounce values in the same way as with the trivial implementation, but with only 2 tasks instead of 4 for the same sequence of events, which avoids creating and cancelling a task for every value.
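The task count can be checked with a small simulation that needs no concurrency at all. The `taskCounts(eventTimes:duration:)` function below is a hypothetical helper: it assumes event times in seconds, sorted in ascending order, and it treats an event arriving exactly at the due time as arriving after the previous burst was delivered.

```swift
/// Counts how many tasks each strategy starts for events arriving at `eventTimes`.
/// Hypothetical helper, used for illustration only.
func taskCounts(eventTimes: [Double], duration: Double) -> (trivial: Int, optimized: Int) {
    var optimized = 0
    var dueTime: Double? = nil // nil means nothing is being debounced yet

    for time in eventTimes {
        // The state machine is idle if nothing is pending or if the pending value
        // has already been delivered when this event arrives.
        let isIdle = dueTime.map { time >= $0 } ?? true
        if isIdle {
            optimized += 1 // a new task is needed to start a new burst
        }
        // Otherwise the running task is reused and only the due time moves.
        dueTime = time + duration
    }

    // The trivial version creates one task per event.
    return (trivial: eventTimes.count, optimized: optimized)
}

let counts = taskCounts(eventTimes: [0, 1, 2, 5], duration: 2)
print(counts.trivial, counts.optimized) // prints "4 2"
```

The event times match the earlier example: values emitted at 0s, 1s and 2s belong to the same burst and share one task, while the value emitted at 5s starts a second one.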

In the context of a SwiftUI view

Let’s try to debounce a TextField with a 500ms delay.

    import SwiftUI

    struct ContentView: View {
        private let debounce = Debounce<String>(duration: .milliseconds(500)) { value in
            print(value)
        }

        @State private var text = ""

        var body: some View {
            TextField("Debounced", text: self.$text)
                .onChange(of: self.text) { newText in
                    self.debounce.emit(value: newText)
                }
        }
    }

With this implementation, we can easily track changes made to a TextField. Every time the TextField is updated, the latest text is printed once 500ms have passed without any further input.

Additionally, the same approach can be used to debounce Button taps.

    struct ContentView: View {
        private let debounce = Debounce<String>(duration: .milliseconds(500)) { value in
            print(value)
        }

        var body: some View {
            Button {
                self.debounce.emit(value: "1")
            } label: {
                Text("Button")
            }
        }
    }

You can click the button repeatedly and “1” will be printed only once, after 500ms of inactivity.

Here it is. I hope you found this read helpful.

You could even inject the Debounce into the view if you want to use external dependencies to perform the work.

[BONUS] You can take a look at Regulate, a lightweight open source library that provides the Debounce and Throttle operators.

Stay tuned.